How Google leverages BERT depends on the use of the term ‘Passage Indexing’ (although it’s identified as a ranking update).

It’s been 12 months since Google announced BERT in production search, and it should be no surprise that the recent Search On event, falling almost on the eve of production BERT’s first birthday, included so much talk of huge progress and breakthroughs using AI and BERT during the past year.

A recap on what BERT is

To recap, the Google BERT October 2019 update is a machine learning update purported to help Google better understand queries and content, disambiguating nuance in polysemous words via a greater understanding of a word’s meaning in context. The initial update impacted just 10% of English queries as well as featured snippets in territories where they appeared.

Importantly, that initial BERT search update was for disambiguation primarily, as well as text extraction and summarisation in featured snippets. The disambiguation aspect mostly applied to sentences and phrases.

Within a month or so of BERT’s production search announcement, roll out began to many more countries, albeit still only impacting 10% of queries in all regions.

Initially the October 2019 announcement caused quite a stir in the SEO world, not least because, according to Google, when announcing BERT, the update represented the “biggest leap forward in the past five years, and one of the biggest leaps forward in the history of search.”

This was clearly the most important announcement since RankBrain and no exaggeration — and not just for the world of web search. Developments related to BERT during the preceding 12 months for the field of natural language understanding (a half century old area of study), had arguably moved learnings forward more in a year than the previous fifty combined.

The reason for this was another BERT: a 2018 academic paper by Google researchers Devlin et al. entitled “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” Note, I will be referencing several academic papers here. You will find a list of sources and resources at the end of this article.

BERT (the paper) was subsequently open-sourced for others in the machine learning community to build upon, and was unquestionably a considerable contributor to the world’s dramatic progress in computational language understanding.

BERT’s basic idea is that it uses bi-directional pre-training on a context window of words from a large text collection (English Wikipedia and BookCorpus), using a transformer “attention” mechanism to see all of the words to the left and to the right of a target in a sliding context window simultaneously for greater context.

Once trained, BERT can be used as a foundation and then fine-tuned on other more granular tasks, with much research focus on downstream natural language understanding and question answering.

An example for clarity of the ‘context window’ for ‘word’s meaning’

Since the scope of a context window is an important concept I have provided an example for illustration:

If a context window is 10 words long and the target word is at position 6 in that sliding “context window,” not only can BERT see words 1-5 to the left, but also words 7-10 to the right at the same time, because attention compares every pair of words in the window (a cost that grows quadratically with the window length).

This is a big advancement. Previous models were uni-directional, meaning they could only see words 1-5 to the left, but not 7-10 until they reached those words in the sliding window. Using this bi-directional nature and simultaneous attention provides full context for a given word (within the constraints of the length of the window of course).
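The difference in what each kind of model can "see" can be sketched in a few lines of Python. This is only a toy illustration of visibility within the 10-word window described above, not BERT's actual mechanics; the function name `visible_context` and the placeholder words `w1` to `w10` are assumptions made for the example.

```python
def visible_context(words, target_index, bidirectional):
    """Return the words available as context for the target word."""
    left = words[:target_index]
    # A uni-directional (left-to-right) model has not yet read the words
    # to the right of the target, so they contribute no context.
    right = words[target_index + 1:] if bidirectional else []
    return left + right


# A 10-word window of placeholder words; position 6 is index 5.
window = [f"w{i}" for i in range(1, 11)]

print("uni-directional:", visible_context(window, 5, bidirectional=False))
print("bi-directional: ", visible_context(window, 5, bidirectional=True))
```

The uni-directional call lists only words 1-5, while the bi-directional call lists words 1-5 and 7-10 together.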

For example, the word “bank” is understood differently if the other words within the context window also include “river” or “money.” The co-occurring words in the context window add to the meaning and suddenly “bank” is understood as being a “financial bank” or a “river bank.”
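To make the intuition concrete, here is a deliberately crude sketch that picks a sense for “bank” by counting cue words in the context window. It is a simple keyword-overlap heuristic, not how BERT works (BERT learns contextual representations rather than using hand-written lists); the cue lists and the function name `guess_sense` are invented for illustration.

```python
# Hypothetical, hand-picked cue words for each sense of "bank".
SENSE_CUES = {
    "financial bank": {"money", "loan", "account", "deposit"},
    "river bank": {"river", "water", "fishing", "shore"},
}


def guess_sense(context_words):
    """Pick the sense whose cue words overlap most with the context window."""
    words = {w.lower() for w in context_words}
    scores = {sense: len(cues & words) for sense, cues in SENSE_CUES.items()}
    best = max(scores, key=scores.get)
    # With no cue words in the window there is nothing to disambiguate with.
    return best if scores[best] > 0 else "unknown"


print(guess_sense("she sat on the bank of the river".split()))
print(guess_sense("he paid money into the bank".split()))
```

The point is only that the same target word resolves differently depending on which other words share its window.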

Back to the October 2019 Google BERT update announcement

The October 25, 2019, production search announcement followed what had been a frenetic BERT-focused year in the language research community.

In the period between 2018 and 2019 all manner of BERT-type models named after Sesame Street characters appeared, including ERNIE, from Baidu. Facebook and Microsoft were also busy building BERT-like models, and improving on BERT at each turn. Facebook claimed their RoBERTa model was simply a more robustly trained version of BERT. (Microsoft says it’s been using BERT in Bing since April 2019.)

Big tech AI teams leapfrogged each other in various machine learning language task leaderboards, the most popular amongst them SQuAD (Stanford Question Answering Dataset), GLUE (General Language Understanding Evaluation), and RACE (ReAding Comprehension from Examinations), beating human language understanding benchmarks as they went.

But what of 2020?

Whilst the SEO world has been quieter of late on the topic of BERT (until this month), enthusiasm in the deep learning and natural language processing world around BERT has accelerated, rather than waned in 2020.

2019 / 2020’s developments in AI and natural language understanding should absolutely make SEOs up their BERT-stalking game once more, particularly in light of this week’s announcements from Google’s Search On online event.

BERT does not always mean the ‘BERT’

An important note before we continue:

“BERT-like” — a descriptive term for pre-training a large unlabelled text model on “language” and then using transfer learning via transformer technologies to fine-tune models utilizing a range of more granular tasks.

Whilst the 2019 Google update was called BERT, it was more likely a reference to a methodology now used in parts of search and the machine learning language field overall rather than a single algorithmic update per se, since BERT and “BERT-like,” even in 2019, were becoming known in the machine learning language world almost as adjectives.

Back to Google’s AI in search announcements

“With recent advancements in AI, we’re making bigger leaps forward in improvements to Google than we’ve seen over the last decade, so it’s even easier for you to find just what you’re looking for,” said Prabhakar Raghavan during the recent Search On event.

And he was not exaggerating, since Google revealed some exciting new features coming to search soon, including improvements to mis-spelling algorithms, conversational agents, image technology and humming to Google Assistant.

Big news too on the BERT usage front. A huge increase in usage from just 10% of queries to almost every query in English.

“Today we’re excited to share that BERT is now used in almost every query in English, helping you get higher quality results for your questions.”

(Prabhakar Raghavan, 2020)

Passage indexing

Aside from the BERT usage expansion news, one other announcement in particular whipped the SEO world up into a frenzy.

The topic of “Passage Indexing,” whereby Google will rank and show specific passages from parts of pages and documents in response to some queries.

Google’s Raghavan explains:

“Very specific searches can be the hardest to get right, since sometimes the single sentence that answers your question might be buried deep in a web page. We’ve recently made a breakthrough in ranking and are now able to not just index web pages, but individual passages from the pages. By better understanding the relevancy of specific passages, not just the overall page, we can find that needle-in-a-haystack information you’re looking for. This technology will improve 7 percent of search queries across all languages as we roll it out globally.”

(Prabhakar Raghavan, 2020)

An example was provided to illustrate the effect of the forthcoming change.

“With our new technology, we’ll be able to better identify and understand key passages on a web page. This will help us surface content that might otherwise not be seen as relevant when considering a page only as a whole…,” Google explained last week.

In other words, a good answer might well be found in a single passage or paragraph in an otherwise broad topic document, or a random blurb page without much focus at all. Consider, for example, the many blog posts and opinion pieces with much irrelevant content or mixed topics, in a still largely unstructured and disparate web of ever-increasing content.

It’s called passage indexing, but not as we know it

The “passage indexing” announcement caused some confusion in the SEO community with several interpreting the change initially as an “indexing” one.

A natural assumption to make since the name “passage indexing” implies…erm… “passage” and “indexing.”

Naturally some SEOs questioned whether individual passages would be added to the index rather than individual pages, but, not so, it seems, since Google have clarified the forthcoming update actually relates to a passage ranking issue, rather than an indexing issue.

“We’ve recently made a breakthrough in ranking and are now able to not just index web pages, but individual passages from the pages,” Raghavan explained. “By better understanding the relevancy of specific passages, not just the overall page, we can find that needle-in-a-haystack information you’re looking for.”

This change is about ranking, rather than indexing per se.

What might those breakthroughs be and where is this headed?

Whilst only 7% of queries will be impacted in the initial roll-out, further expansion of this new passage indexing system could have much bigger implications than one might first suspect.

Without exaggeration, once you begin to explore the literature from the past year in natural language research, you become aware this change, whilst relatively insignificant at first (because it will only impact 7% of queries after all), could have potential to actually change how search ranking works overall going forward.

We’ll look at what those developments are and what might come next.

Passage indexing is probably related to BERT + several other friends… plus more new breakthroughs

Hopefully more will become clear as we explore the landscape below since we need to dig deeper and head back to BERT, the progress in NLP AI around big developments closely related to BERT, and in the ranking research world in the last year.

The information below is mostly derived from recent research papers and conference proceedings (including research by Google search engineers either prior to working at Google, or whilst working at Google) around the information retrieval world (the foundational field of which web search is a part).

Where a paper is referenced I have added the author and the year despite this being an online article to avoid perception of rhetoric. This also illustrates more clearly some of the big changes which have happened with indication of some kind of timeline and progress leading up to, and through 2019 and 2020.

Big BERTs everywhere

Since the October 2019 announcement, BERT has featured EVERYWHERE across the various deep learning research industry leaderboards. And not just BERT, but many BERT-like models extending upon or using a BERT-like transformer architecture.

However, there’s a problem.

BERT and BERT-like models, whilst very impressive, are typically incredibly computationally expensive, and therefore financially expensive, to train and to include in production environments for full ranking at scale, making the 2018 version of BERT an unrealistic option in large scale commercial search engines.

The main reason is BERT works off transformer technology which relies on a self-attention mechanism so each word can gain context from seeing the words around it at the same time.

“In the case of a text of 100K words, this would require assessment of 100K x 100K word pairs, or 10 billion pairs for each step,” per Google this year. These transformer systems in the BERT world are becoming ubiquitous, however this quadratic dependency issue with the attention mechanism in BERT is well known.

More simply put: the more words added to a sequence, the more word combinations need to be focused on all at once during training to gain a full context of a word.
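The arithmetic behind this quadratic dependency is simple to check. The sketch below assumes full self-attention, where every word is compared with every word in the sequence (itself included); the helper name `attention_pairs` is made up for the example.

```python
def attention_pairs(sequence_length):
    """Number of word pairs full self-attention assesses for one sequence."""
    # Every word attends to every word, so the count is n x n.
    return sequence_length * sequence_length


for n in (10, 1_000, 100_000):
    print(f"{n:>7,} words -> {attention_pairs(n):>14,} word pairs")
```

For 100,000 words this gives the 10 billion pairs quoted above, and doubling the length of a sequence quadruples the number of pairs.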

But the issue is “bigger is definitely better” when it comes to training these models.

Indeed, even Jacob Devlin, one of the original BERT authors, confirms the effect of model size in this presentation on Google BERT, with a slide saying: “Big models help a lot.”

Big BERT-type models mostly have seemed to improve upon SOTA (State of the Art) benchmarks simply because they are bigger than previous contenders. Almost like “Skyscraper SEO” which we know is about identifying what a competitor has already and “throwing another floor on (dimension or feature),” to beat by simply doing something bigger, or better. In the same way, bigger and bigger BERT-like models have been developed merely by adding more parameters and training on more data in order to beat previous models.

Huge models come from huge companies

The most impressive of these huge models (i.e. those which beat SOTA on the various machine learning leaderboards) tend to be the work of research teams at the huge tech companies, primarily the likes of Microsoft (MT-DNN, Turing-NLG), Google (BERT, T5, XLNet), Facebook (RoBERTa), Baidu (ERNIE) and OpenAI (GPT, GPT-2, GPT-3).

Microsoft’s Turing-NLG recently dwarfed all previous models as a 17 billion parameter language model. It’s used in Bing’s autosuggest and other search features. The number of parameters is illustrated in the image below and shows Turing-NLG compared to some of the other industry models.

GPT-3

Even 17 billion parameters is nothing though when compared with OpenAI’s 175 billion parameter language model GPT-3.

Who can forget the sensationalized September 2020 Guardian newspaper piece about GPT-3 designed to shock, entitled “A robot wrote this entire article. Are you scared yet, human?”

In reality this was merely next word prediction at massive scale, but to the layperson unaware of developments underway in the natural language space, it is no wonder this article was met with such a kerfuffle.

Google T5

Google’s T5 (Text-to-Text Transfer Transformer), (a more recent transformer based language model than BERT), released in February 2020, had a mere 11 billion parameters.

This was despite being pre-trained by a Google research team on a text collection drawn from a huge web crawl: petabytes of data covering billions of web pages dating back to 2011 from the Common Crawl. The collection is aptly named C4 because of the four C’s in its full name, “Colossal Clean Crawled Corpus,” a nod to its size.

But with big and impressive models comes expense.

BERTs are expensive (financially and computationally)

The staggering cost of training SOTA AI models

In an article entitled “The Staggering Cost of Training SOTA (State of The Art) AI Models,” Synced Review explored the likely costs involved in training some of the more recent SOTA NLP AI models, with figures ranging from hundreds of dollars per hour (and training can take many hours) to hundreds of thousands of dollars in total cost to train a model.

These costs have been the subject of much discussion, but it is widely accepted that, regardless of the accuracy of third party estimations, the costs involved are extortionate.

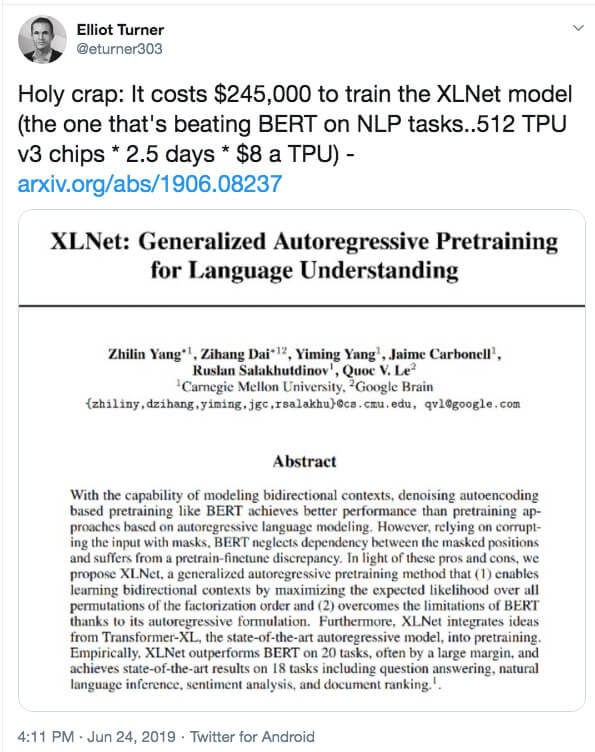

Elliot Turner, founder of AlchemyAPI (acquired by IBM Watson), surmised that the cost to train XLNet (Yang et al., 2019), a combined work between the Google Brain team and Carnegie Mellon released in January 2020, was in the region of $245,000.

This sparked quite a discussion on Twitter, to the point where even Google AI’s Jeff Dean chipped in with a Tweet to illustrate the offset Google were contributing in the form of renewable energy.

And herein lay the problem, and likely why BERT was only used on 10% of queries by Google at production launch in 2019, despite the territorial expansion.

Production level BERT-like models were colossally expensive from both a computational and financial perspective.